by Daniel Marques

With the rise of social media and digital platforms over the last 10 to 15 years, privacy has become a hot topic of conversation among scholars, media outlets and the general population. Speaking from personal experience, I was struck with curiosity when I first came across the idea of “Privacy by Design.” As a designer, the idea of thinking about privacy and design together intrigued me. Privacy by design is a framework aimed at developers, designers, and project managers who seek to engineer privacy in the early stages of product development, especially of digital artifacts. Initially proposed by Ann Cavoukian, former information and privacy commissioner for Ontario, Canada, this framework has been widely discussed by scholars from different fields such as information security, user interface design, computer science, computer engineering, project management and so forth. Even though the idea seems interesting (how can we engineer and protect privacy in the 21st century?), my initial wonder rapidly turned into skepticism. How can privacy by design be widely adopted when most tech companies rely on personal data collection and processing as the foundation of their business model?

Or, to put it differently, how can we be more cautious about digital platforms (that is, businesses) tinkering with privacy and data protection? The same Mark Zuckerberg who, in 2010, asserted that privacy “is no longer a social norm,” had no problem making “the future is private” his main argument at the 2019 F8 conference.

Even though privacy is considered a fundamental human right by different constitutions around the globe, it is essential to note that the human subject cannot be taken as the unit of analysis. We must account for how the human comes to matter in a larger assemblage of other humans, institutions, and non-humans. The human, therefore, is never pure; it emerges through hybrid entanglements (BARAD, 2007), translations (LATOUR, 2012) and radical mediations (GRUSIN, 2015). Failing to acknowledge that is the first mistake when it comes to understanding privacy. To be able to establish the limits between the “public” and the “private,” then, we need to account for the agency of those assemblages and networks. We need “stuff,” “matter,” to have a private life. Privacy is always materially situated, as things help us make sense of, and (co)produce, social reality.

Let’s look in particular at assemblages involving media and communication technologies. Some scholars, for example, trace the emergence of modern privacy through the development of print media and the novel as a narrative format. “The right to be let alone,” an idea that arose in the late 19th century, is heavily influenced by the media landscape of that time:

“Recent inventions and business methods call attention to the next step which must be taken for the protection of the person, and for securing to the individual what Judge Cooley calls the right ‘to be let alone.’ Instantaneous photographs and newspaper enterprise have invaded the sacred precincts of private and domestic life; and numerous mechanical devices threaten to make good the prediction that ‘what is whispered in the closet shall be proclaimed from the house-tops.’” (WARREN; BRANDEIS, 1890)

It is not hard to recognize the assemblage here. Different things fall into place to establish the border between private and public spaces: the middle-class home, mass media, journalism, photography, gossip magazines, celebrity culture, and so forth. Even though today's media landscape is drastically different from that of print, the relationship between privacy and media technologies remains evident. This poses important questions: what kind of media environment (or ecology) do we have today, and how does it impact our enactment of privacy? What are we talking about when it comes to the contemporary media landscape?

The 21st Century Media Landscape: The Rise of the Platform

Since the rise of mass media, media scholarship teaches us that “mediatization” has progressed further and further (HJARVARD, 2014). We have today a much more intricate and complicated relationship between media and other social institutions. Mediatization theory helps us understand how the social comes to be infused by media logic. Media, then, is to be understood not as a mere instrument for communication, but as a powerful social institution capable of exerting change on other social institutions (family, religion, nationality, education, leisure) and on social reality itself.

Things get even more complicated when we account for the rise of digital media platforms. Building upon the idea of deep mediatization (COULDRY; HEPP, 2017), it becomes harder and harder to pinpoint what media technologies are, when they present themselves, and where they act to shape social life. Modern mass media became easy to spot: TV, radio, news channels, magazines, advertising, and movies are undoubtedly media products. But what about relationship apps? Wearable devices? Amazon Prime subscriptions? Google Maps? Tesla cars? Are those media artifacts as well? As we make sense of the social, these are critical questions.

What we have now, then, is a much more nebulous media landscape, somewhat different from mass media and even from the early days of the internet or Web 2.0. The fast and predatory expansion of digital media conglomerates (especially the Big Five: Google, Amazon, Facebook, Apple, and Microsoft) is altering how society functions, understands itself, and enacts relations of power. They are also reconfiguring the threshold between public and private. As we step into a platform society, digital media become even more embodied, less transparent, and more pervasive than ever before. A significant portion of our social actions becomes hypermediated by digital technologies, even if we are not aware of it. The double logic of remediation (BOLTER; GRUSIN, 1999) is in full force here.

PDPA: Platformization, Datafication, and Performative Algorithms

The “platformization” of society, then, can be regarded as the main characteristic that defines the contemporary media landscape. Lemos (2019a, 2019b) refers to this new turn in digital culture (the “platform turn”) in three main points:

- The platformization of society itself, followed by the economic and political rise of platforms such as Facebook, Google, and Amazon;

- Datafication, the process by which social life gets translated into digital data through several different data practices; and

- The widespread deployment of performative algorithms, computational entities that provide “intelligence” for digital media, highly complex social and technical assemblages present in many different media artifacts today.

Contemporary digital culture, then, relies heavily on platformization, datafication, and performative algorithms (PDPA) to be performed (LEMOS, 2019a, 2019b). It is troublesome to think about how deep the roots of the platform society have grown, as a significant part of our daily life runs through highly sophisticated algorithms, data practices, and digital platforms. What are the consequences caused by the emergence of PDPA and the general platformization of everyday life?

The keywords here are control, capital, governance, politics, and business models. As we witness the rise of the platform society, we also witness the rise of platform capitalism, surveillance capitalism, or data capitalism. When data became the new oil (that is, a highly valuable commodity), tech companies and businesses of other kinds rapidly adapted in order to extract value from it. Data extractivism can be regarded not only as a new version of capitalism but also as a new enactment of colonialism and imperialism; these are mostly interdependent. The rush to produce, collect, and analyze data comes at multiple social, economic, and political costs (SADOWSKI, 2019; COULDRY; MEJIAS, 2018).

The platformization of everyday life becomes a way to transform citizens into users/consumers. When life comes to matter through digital platforms, we become part of this assemblage; we act through and with those mediators. They steer our action, helping us perform, enact and embody in particular ways. Digital platforms are thus not just intermediaries in a Latourian sense, but highly skilled mediators. We are continually negotiating with them to control who has access to our private life and when. Our understanding of privacy, then, becomes prescribed by media companies. Prescription, for Latour (1992), entails “the moral and ethical dimension of mechanisms” (p. 157), a way in which power can be delegated through socio-technical networks. A PDPA media system, therefore, encodes certain prescriptions of how privacy is to be understood.

This means that privacy is not over—far from it—but it takes a different shape as platform conglomerates delegate different prescriptions. As I highlighted earlier, according to Mark Zuckerberg “the future is private.” What is at stake here is a test of strength between Facebook’s privacy prescription and our capacity to contest it. Data capitalism carefully designs our potential control over personal data as we are continuously primed to delegate our enactment of privacy to digital platforms.

Control, modulation, and governance are not to be understood as abstract or immaterial. If we have established that privacy is always contingent on materiality (a more-than-human privacy), a sort of “prescribed privacy” may also be materially situated. Platform society and data capitalism cannot be grasped—and therefore disputed—if conceived as purely “immaterial.” They can only be experienced through things. They make themselves visible in privacy policies, code, product design, public speeches (Zuckerberg’s “the future is private!”), patents, lawsuits, predatory inclusion, hostile takeovers, pricing, conferences and conventions, and user interface design. The last one is of particular interest. User interfaces become an essential space for critical academic inquiry and also political action.

The Materiality of Platform Power: Malicious User Interfaces

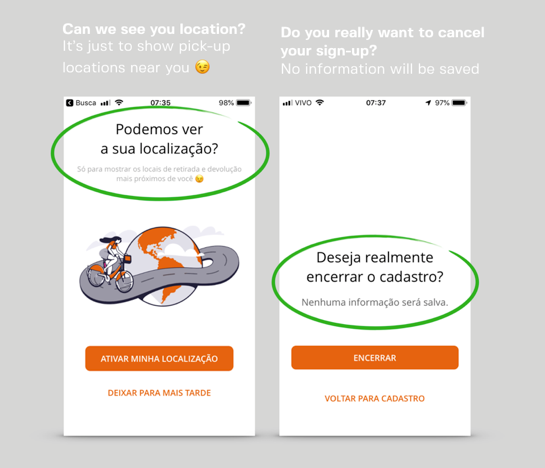

Acting as mediators, user interfaces ultimately attempt to modulate levels of friction, steering user behavior. Friction is an important word here. It stands for the discrepancy between the amount of effort and the resistance of a particular environment when action is performed. Designers and developers design for certain levels of friction, since user interfaces are always trying to accomplish something, to produce specific effects. Interfaces are not just instruments. They are entangled with human subjects to perform actions, and therefore agency can only be located in the entanglement itself. User interfaces are the most evident material layer when it comes to digital platforms. That is one of the reasons why user interfaces are a fruitful site for critical scholarship and political inquiry. Through them, we can perceive breaches and identify controversies, gaining some insight into other material layers, such as algorithms, privacy policies, or monetization practices. Expanding the idea that digital platforms can prescribe privacy, we could argue that user interfaces become valuable stakeholders that help this project come to fruition. User interfaces actively negotiate with the user for the enactment of a particular idea of private life. As expected, this enactment serves not the user's best interests, but those of the platform.

Those interfaces are, as a colleague and I have been framing them, malicious interfaces (LEMOS; MARQUES, 2019, forthcoming). They are composed of interaction strategies designed to reproduce the power asymmetry between user and platform. Malicious interfaces are usually well designed: they actively help users perform particular tasks; that is part of their scheme. The goal of a malicious interface is to maximize data production and collection, prescribing the need to produce more and more data. They build the right dispositions in the user to do so. Malicious interfaces are performative, since they are simultaneously a byproduct of platformization and also essential agents within that same process.

Malicious interfaces, as a theoretical framework, were initially identified by the name dark patterns by Harry Brignull in 2010: “this is the dark side of design, and since these kind of design patterns do not have a name, I am proposing we start calling them dark patterns.” An exciting branch of scholarship flourished after this. Scholars, designers, and developers started looking into these dark patterns in order to build a common terminology and taxonomy. Even though Brignull initially developed the concept to address problems in e-commerce, dark patterns became a powerful tool to think about privacy problems through and with user interfaces.

Most scholars, though, fail to recognize how dark patterns and user interfaces are entangled with a more extensive network of mediations, in particular those of the PDPA. Dark patterns do not just direct the user into random errors; they seek modulation, control, and governance over the interaction, sometimes in an affective way. It is not just a problem of user literacy either, since some of those interfaces are getting more and more inescapable. The shift from “dark patterns” to “malicious interfaces” seeks to highlight their symbiotic relationship with data capitalism.

Malicious interfaces also vary in degrees of aggression. It’s possible to identify multiple levels of intensity when it comes to subverting user control over personal data. Some of them seem more disruptive in their tactics than others. Some of them collect unnecessary user data, but in accordance with the service provided: location data when it comes to parking apps, for example. Others stimulate personal data sharing with third parties. This is a common tactic in data capitalism, as apps generally prompt the user to register using a Facebook or Google account, for example. In more severe cases, we find interfaces that collect data unrelated to the app’s primary function or seek to obfuscate the data practice in some way. There’s no need for an app that deals with public transportation to collect screen time data, for example. Permissions like that are usually buried deep in documents like User Agreements and Privacy Policies. These apps expose more bluntly the workings of data capitalism and, therefore, are less common.

Generally, we’re seeing a potential “naturalization” of malicious interfaces, mostly due to their omnipresence. This is troublesome, mainly because it makes visible how large and dominant platform capitalism has become. We are beholding the opposite of privacy by design. Data capitalism has grown so powerful that designers and developers understand their data collection practices as “good interaction design.” Instead of engineering privacy from the bottom up, most developers have no other option than to conform, (re)producing the governance and power practices carried out by digital platforms.

The Costs of Platformization

It is not just important but fundamental for social researchers and scholars in the digital humanities to critique and interrogate these new kinds of mediation and the performances of digital platforms. For one, our understanding of privacy needs to account for user interfaces and the agential capabilities of data capitalism as a whole. In order to exercise “the right to be let alone,” we need better conditions to assess what “alone” means in the contemporary media landscape. Human privacy should be regarded as a complex assemblage of human and non-human actors, a more-than-human privacy.

Additionally, like any predatory capitalist practice, data capitalism helps perpetuate different systems of inequality and oppression, especially in the Global South and other sites of endangered democracies. Even though digital platforms usually frame their work as “revolutionary,” “innovative,” and “empowering for the user,” in reality, we have mostly the same old drive for capital and power accumulation.

Last, but not least, data capitalism is also a severe hazard for the natural environment. The carbon footprint derived from data practices is getting more and more critical every day. Scholars are beginning to address this as the “Capitalocene,” complicating our understanding of the Anthropocene by accounting for how media technologies establish an ecologically destructive infrastructure around the globe.

The platformization of everyday life, then, helps us weirdly reconnect with the world. The digital infrastructures that remediate our privacy, our household, and everyday life are also critically reshaping earth and its geopolitics. We may lose not just our intimacy and privacy, but ultimately our conditions of existence on the planet. This is the rise of platform necropolitics.

Daniel Marques is a Brazilian assistant professor of design and digital media in the Center of Culture, Languages, and Applied Technologies at the Federal University of Recôncavo da Bahia (CECULT/UFRB) and an affiliated researcher at Lab404. He is also a PhD candidate in Digital Culture and Communication at the Federal University of Bahia (PPGCCC/UFBA) and a Fulbright Visiting Research Student at UWM’s Center for 21st Century Studies.

REFERENCES

LATOUR, B. “Where Are the Missing Masses? The Sociology of a Few Mundane Artifacts,” in Wiebe E. Bijker and John Law (eds.), Shaping Technology/Building Society: Studies in Sociotechnical Change (Cambridge, Mass.: MIT Press, 1992), 225–258.

LATOUR, B. “Reagregando o Social: uma introdução à teoria do Ator-Rede.” EDUFBA, 2012.

LEMOS, A. Epistemologia da Comunicação, Neomaterialismo e Cultura Digital. Forthcoming, 2019a.

LEMOS, A. Plataformas, dataficação e performatividade algorítmica (PDPA). Desafios atuais da cibercultura. Forthcoming, 2019b.

GRUSIN, R. “Radical Mediation,” Critical Inquiry 42, no. 1 (2015), 124–148.

BARAD, K. M. Meeting the Universe Halfway: Quantum Physics and the Entanglement of Matter and Meaning (Durham: Duke University Press, 2007).

BOLTER, J. D.; GRUSIN, R. Remediation: Understanding New Media (Cambridge, Mass.: MIT Press, 1999).

COULDRY, N.; MEJIAS, U. A. “Data Colonialism: Rethinking Big Data’s Relation to the Contemporary Subject,” Television & New Media, 20(4), 336–349.

HJARVARD, S. “Mediatization: Conceptualizing Cultural and Social Change,” Matrizes 8, no. 1 (2014): 21–44.

LEMOS, A; MARQUES, D. “Malicious Interfaces: Personal Data Collection through Apps,” V!RUS, São Carlos, forthcoming, 2019.

PROST, A. “Public and Private Spheres in France,” in ARIÈS, P; DUBY, G (eds.), A History of Private Life V: Riddles of Identity in Modern Times (Cambridge: Harvard University Press, 1991).

WARREN, S. D.; BRANDEIS, L. D. “The Right to Privacy,” Harvard Law Review 4, no. 5 (1890), 193–220.

SADOWSKI, J. “When Data is Capital: Datafication, Accumulation, and Extraction,” Big Data & Society, 6, no. 1 (2019), 1-15.